This page collects questions raised by engineering teams from various domains and organizations that were about to deploy Arcadia, or were already deploying it and needed support or clarification on the method and its use.

The answers given in this document are those that were proposed to engineering teams and applied by them in a coaching context, with little or no filtering. So they may not be relevant to every context or domain, nor accessible to every engineer, but at least they faithfully reflect real-life concerns and the way they were addressed.

Warning: these questions and answers are by no means sufficient on their own to understand Arcadia and master its deployment. The reader is strongly advised to read the Arcadia reference documents before this one.

Jean-Luc Voirin ©Thales 2023

The content of this page is also available in PDF format: Arcadia Q&A.pdf

Arcadia core Perspectives: Why and How

Operational analysis is an essential contribution to the development of input data for defining the solution. It requires temporarily setting aside expectations about the solution itself, in favor of understanding users, their goals, and their needs, free from preconceptions about the solution.

However, its most important use is to stimulate a deeper understanding and definition of the need beyond the customer's requirements alone, achieved through a critical analysis of the formalization represented by the operational analysis.

Much of the added value of operational analysis lies in the "analysis" aspect rather than in mere capture or formalization, as it brings out different perspectives, opportunities, constraints, and risks that might not otherwise have been taken into account. It can lead to proposing new capabilities that the system could offer, new services expected from it, as well as previously unforeseen operational contexts and risk situations.

For example, in many operational analyses, the first-level description differs little from one mission to another. This is normal, but to be useful, the analysis must then either be refined to reveal differences at a finer level, which will feed the reflection (not recommended a priori), or address the question: "where are the differences, on which entities and activities do they occur, what constraints do they impose?" The difference may lie in constraints, non-functional properties, quantitative elements of scenarios, nature of information and time scales, etc., which will then be essential for the performance of the solution in a real operational context.

Furthermore, the behavior of cooperative entities is often well described in operational analysis (although often generic, see above), but the behavior of other actors (threats, objects of interest, or non-cooperative actors in particular) is often not. However, there is a good chance that many of the constraints and expectations on performance come from the specificities of their behavior, their temporal evolution, their own goals and capabilities, etc. All of this must be captured and analyzed within the framework of operational analysis.

It is then essential, of course, to compare the analysis of the system's needs (and beyond) with this analysis and to evolve one or the other if necessary.

A good way to start is to forget about modeling and instead focus on "telling a story" that describes the needs, expectations, and daily lives of end users and stakeholders.

This can be done using existing documents, by observing stakeholders using current systems and means, or by conducting interviews ("what is your job? Tell me about a typical day or mission. What makes your activities easier? What complicates or jeopardizes them?..."). At this stage, formalization is limited to writing free text and checking that it is representative of the need.

- Establish the dimensioning situations and scenarios, the required capabilities to detect/face them and their dependencies, the induced constraints that will later influence the system

- Put these capabilities into a time perspective (capability roadmap)

- Define the operational doctrines, concepts, and roles of the actors, associated operational procedures

- Evaluate new situations, along with gaps and discrepancies in the capabilities of the existing system relative to the goals to be achieved

- Identify opportunities that will then feed the definition of solution alternatives in response to this need

- Define the conditions for the future operational evaluation of the solution to be developed: expected properties, constraints, operational scenarios, etc.

Secondly, identify the key words in the previous material and determine for each how it could be represented in the operational analysis model (if relevant):

- missions and capabilities,

- operational entities/actors and their links,

- activities carried out by these entities and interactions between them,

- operational information and domain model,

- operational processes, temporal scenarios,

- mission phases, operational modes,

- engagement rules, etc.,

- programmatic vision (capability increments...).

Finally, build an initial model on this basis and validate/extend it with stakeholders.

Here are the types of questions that can be asked during the development of the OA, which can either lead to completing it or deriving elements for the rest of the architectural definition (not exhaustive!):

- For each major activity and stakeholder (entity or actor), what makes it different from others? What makes it effective, what hinders it? What does it need to improve its effectiveness? What are the opportunities to provide other outputs, to enrich the service rendered - possibly with additional inputs?

- For each activity, who else is likely to carry it out (entity, actor, other activity)?

- For each interaction between activities (and entities), what could disrupt it? Under what conditions does it occur? Who else besides the identified destinations could benefit from it? Who else besides the identified sources could provide it, or provide complementary elements? And would that be desirable?

- What disruptions are likely to occur in the activity or its inputs? What unintended uses could be made of its outputs?

- What are the conditions of overlap or exclusion with other desired, imposed, and suffered activities? What is the link with any operational modes and states of entities?

- What data or information, activities, actors or entities, interactions, etc., are most operationally important, according to the main points of view (value for the operational mission, criticality from a safety and security perspective...)?

- What representative operational scenarios and processes describe the desired use of operational activities, interactions, etc., to contribute to associated missions and capabilities ("sunny days scenarios"), and how do they fit into time (timing of the mission, operations, expected system lifecycle, etc.)?

- What unwanted operational scenarios and processes should be avoided ("rainy days scenarios")?

- What constraints apply on each element (especially non-functional ones: performance, security, safety, operational importance, value...)?

- What uncertainties, sequence disruptions, contextual changes, undesired states, degraded modes are likely to occur, and what consequences will they have on the previous elements?

- How will the needs, constraints, contexts, situations, processes, etc., evolve over time?

Important note: there are many other analysis and creativity approaches, such as CD&E, design thinking, approaches such as C-K, and methodological approaches supporting enterprise architectures such as NAF V4, which would be useful, which OA does not replace, and which I also recommend considering. But on the one hand, they are complementary and do not cover the same needs or the same scope of reflection as Arcadia, and on the other hand, their data and those of OA should feed each other.

Based on operational analysis (OA), system needs analysis (SA) will define the contribution of the solution (referred to as the "system" hereafter) in terms of scope and expectations, particularly functional expectations. In other words, what services should the system provide to users to contribute to operational capabilities?

When conducting system needs analysis from operational analysis, the following questions should be asked:

- "Among the operational capabilities, which ones concern the system and/or its operators/users?"

- "How can the system contribute to each operational activity (from OA)? What services (or functions) can be derived from it, whether they are expected from the system, users or operators, or entrusted to other external systems?" This may also generate new features to offer or different system contribution alternatives.

- "For each interaction between operational activities, should/can the system be involved? In what way (displayed to the operator or treated internally...)?" This can also generate new services or expected functions.

- "For each function that is expected from the system, how can each operational activity or interaction benefit from it? How can it constrain or influence this function?"

- The same applies to operational processes and scenarios, states and modes... "How should the system assist users in each operational process, scenario, in each of their states and modes of operation? What constraints coming from these elements apply to the services it must provide?"

Study the conditions of overlap of several scenarios, states and modes, and how this overlap can alter them: new activities, need for parallel activities, separation of an activity into several... and associated functions.

The separation between OA and SA, in addition to the benefits mentioned above, also makes it possible to formalize and analyze the first major engineering choices, particularly in the case of a new product or product line.

In these situations, several ways of meeting the same operational need may appear: different distributions of contributions between the system and its environment (use of other systems, for example), more or less advanced automation entrusted to the system, etc.

Engineering is therefore required to make an initial choice, not of design, but of delimiting the need allocated to the system.

It may then be wise to capture the different alternatives explicitly, either through variability in a single SA, or in several candidate SAs to be evaluated in order to select the most appropriate one for the context.

A good definition of the human/system interaction is important, particularly regarding the users or operators of the system, both for communicating with the client and for preparing for integration, verification, and validation (IVV). To achieve this, you can ask yourself the following questions:

- Do the exchanges between operator/user and system accurately translate all the actions the user must take towards the system to carry out their operational activities?

- For each user activity that uses expected system elements, does the system provide the feedback the user needs?

- Does the labeling of system and user functions accurately reflect the part that each of them takes on?

- Are there multiple system implementation contexts (e.g. novice or expert user; or multiple levels of automation...)?

- In which cases does the user initiate the interaction, and in which does the system initiate it (e.g. alert, end-of-task notification...)? What information should the system present in the latter case?

- Between two interactions with the system, does the user need to make a decision that will condition the rest (in which case, it may be good to display it as an exchange between two operator functions, to make the Functional Chains or scenarios more meaningful)?

- Can the user be interrupted and resume their activities later, and if so, are there any constraints? In such a case, it would be preferable to split the operator activity into several functions...

- Is the level of description of user activities and interactions necessary and sufficient to define and execute the verification/validation procedures?

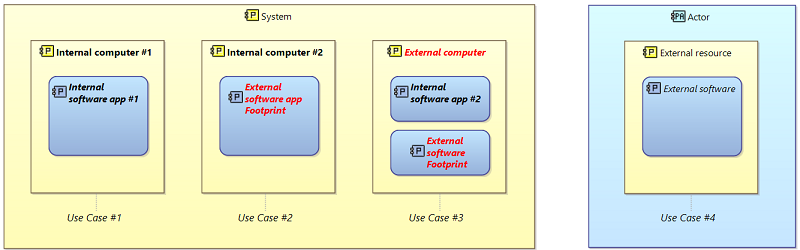

In some (mainly software oriented) modelling approaches, what is called a "logical" architecture is a definition of (software) components and assembly rules, while "physical" architecture is a description of one or more deployments of instances of these components, on execution nodes and communication means.

This is not the case in Arcadia: the major reason for logical architecture (LA) is to manage complexity, by defining first a notional view of components, without taking care of design details, implementation constraints, and technological concerns - provided that these issues do not influence architectural breakdown at this level of detail.

In this way, the major orientations of the architecture can be defined and shared, while hiding part of the final complexity of the design, and without depending on technologies. As an example, some domains or product lines have a single, common logical architecture for several projects or product variants.

In fact, logical architecture should have been named 'notional architecture' or 'conceptual architecture', but we had to comply with existing legacy names.

Physical architecture (PA) describes the final solution extensively: functional behavior, behavioral components (incl. software) realizing this functional content, and resource implementation components (incl. execution nodes).

Physical Architecture provides the details that were not taken into account at LA level, in order to give a description of the solution that is ready to be subcontracted, purchased or developed, and integrated. So all configuration items, software components, hardware devices... should be defined here (or later), but not before.

For this reason, maybe physical architecture would be better named as ‘finalized architecture’…

As you can imagine, then, one logical component often corresponds to several physical components, the relation between the logical and physical levels being one-to-many. Similarly, the functional description of a component is notional in LA, and detailed enough in PA for it to be subcontracted.

Consequently, for example, it is much easier to start defining an IVVQ strategy or a product variability definition on the limited number of functions and components mentioned in LA, to quickly evaluate alternatives and select the best ones. Once this is done, this definition can be checked, refined and applied to the fully detailed description of PA.

For the same reason, once they have finalized their physical architecture, many people feel the need to synthesize it in a more manageable and shareable representation, grouping some components and functions, hiding others, etc. This simply amounts to building, a posteriori, the logical architecture they initially lacked!

However, if the level of complexity really does not require two functional levels to describe the solution, then the following recommendations should be followed:

- If an implementation view is not needed, then an LA is sufficient without a PA.

- If an implementation view is needed, then it would be better to put the detailed functional description in PA to have a regular model (and in Capella, the viewpoints, query engines, and other semantic browsers will thank you).

Arcadia language specifics

A capability is the ability of an organization (incl. Actors & Entities) or a system to provide a service that supports the achievement of high-level operational goals. These goals are called missions.

A mission uses different capabilities to be performed. A capability is described by a set of scenarios, and functional chains (or operational processes in operational analysis), each describing a use case for the capability.

In Arcadia, there are operational missions and capabilities in OA (Operational Analysis), as well as system missions and capabilities (including users) in SA, LA, and PA (System Needs Analysis, Logical Architecture, Physical Architecture). An expected operational capability of an actor should, if the system contributes to it, be derived (via traceability links) into one or more system capabilities in SA.
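The derivation rule above lends itself to a simple consistency check. The following is a minimal Python sketch under stated assumptions, not a Capella feature. The function name `untraced_capabilities`, the capability names, and the representation of traceability links as (system capability, operational capability) pairs are all hypothetical. It lists the operational capabilities that the system contributes to but that no system capability realizes yet. It also shows that one operational capability may be derived into several system capabilities.

```python
def untraced_capabilities(operational, contributed, realizations):
    """Return operational capabilities that the system contributes to,
    but that no system capability (SA) is traced to yet.

    operational:  names of operational capabilities (OA)
    contributed:  names of those the system contributes to
    realizations: hypothetical (system_capability, operational_capability) links
    """
    realized = {op_cap for _, op_cap in realizations}
    return sorted(c for c in operational if c in contributed and c not in realized)


# Hypothetical example data.
operational = {"Monitor maritime traffic", "Rescue people at sea", "Train crews"}
contributed = {"Monitor maritime traffic", "Rescue people at sea"}
realizations = [
    # One operational capability may be derived into several system capabilities.
    ("Provide surface picture", "Monitor maritime traffic"),
    ("Track vessels", "Monitor maritime traffic"),
]

print(untraced_capabilities(operational, contributed, realizations))
# prints ['Rescue people at sea']
```

"Train crews" is not reported because, in this example, the system does not contribute to it.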

In Capella, there should also be missions in both OA and SA, as provided for by Arcadia.

To understand what the concept of mission and capability covers, we can use the analogy of an employee in a company:

- The company assigns a mission to the employee (often summarized in the job title).

- The company ensures that the employee has the required capabilities to fulfill this mission (their skills, as listed on their CV).

- The employee performs daily activities (analogous to functions or operational activities), which are carried out by applying processes and procedures (functional chains or scenarios).

- Just as a (hiring) job interview validates the required skills, IVV will verify the capabilities of the solution.

- We can see that capabilities do not represent everything an engineer can do in their daily work; this falls under operational activities or functions, depending on whether we model in OA or SA, LA, PA.

- Therefore, capabilities have more "added value" than activities or functions, and are directly related to a goal. This is why there is generally a limited number of capabilities in the model, and why they structure IVV, i.e. the verification of the system's adequacy to the client/user's needs.

The expected behavior of the system or a component is described in Arcadia by the functional definition constituted by the dataflow (functions, functional exchanges, functional chains and scenarios, modes and states, exchanged data).

A function is fully described by its textual definition (and/or the requirements associated with it), its possible properties or attributes and constraints, the data it needs as inputs and those it is responsible for providing as outputs, as well as its implications in the functional chains and scenarios that involve it: functional exchanges involved, definition (textual) of the function's contribution in the chain or scenario ('involvements' in Capella).

This functional definition must be made at the finest level of detail necessary for understanding and verifying the expected behavior. It is the functions at this level of detail (called "leaf functions") that are allocated on the components and that define their behavior.

For convenience, both to offer a more accessible level of synthesis and representation, and to structure the presentation of the functional analysis, these leaf functions can be grouped into more synthetic parent functions. But these parent functions have only a documentary role (by categorizing the leaf functions): the reference remains the leaf functions. For Arcadia, they alone carry the definition and behavioral analysis of the architecture.

For example, a functional chain that would pass through a parent function itself instead of its leaf functions would leave a major ambiguity in the model regarding the role of each leaf function and its expectations (data to be produced, contribution to the chain, etc.).

Therefore, in a finalized state of the modeling, all leaf functions should carry the functional exchanges involved, and each should ideally be involved (along with its exchanges) in at least one functional chain or scenario.
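These completeness rules can be checked mechanically on a finalized model. Below is a minimal Python sketch, assuming a hypothetical in-memory model rather than the Capella metamodel. `Function`, `Exchange` and `check_leaf_rules` are illustrative names. The set of involved functions is assumed to have been collected beforehand from the functional chains and scenarios. The sketch also flags parent functions that carry exchanges, in line with the related recommendation that only leaf functions carry exchanges.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Function:
    name: str
    children: list[Function] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children


@dataclass(frozen=True)
class Exchange:
    name: str
    source: str  # producing (leaf) function
    target: str  # consuming (leaf) function


def all_functions(root: Function):
    """Depth-first walk of the functional breakdown."""
    yield root
    for child in root.children:
        yield from all_functions(child)


def check_leaf_rules(root: Function, exchanges, involved: set[str]) -> list[str]:
    findings = []
    connected = {e.source for e in exchanges} | {e.target for e in exchanges}
    for f in all_functions(root):
        if not f.is_leaf():
            # Parent functions only group leaves: they should carry no exchange.
            if f.name in connected:
                findings.append(f"parent function '{f.name}' carries exchanges")
            continue
        if f.name not in connected:
            findings.append(f"leaf function '{f.name}' has no exchange")
        if f.name not in involved:
            findings.append(f"leaf function '{f.name}' is not involved in any chain or scenario")
    return findings


root = Function("Navigate", [
    Function("Acquire position"),
    Function("Compute route"),
    Function("Display route"),
])
exchanges = [
    Exchange("position", "Acquire position", "Compute route"),
    Exchange("route", "Compute route", "Display route"),
]
involved = {"Acquire position", "Compute route"}  # e.g. gathered from chains and scenarios

for finding in check_leaf_rules(root, exchanges, involved):
    print(finding)
# prints: leaf function 'Display route' is not involved in any chain or scenario
```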

Most modeling tools offer (mainly) a top-down construction approach based on decomposition (of components, functions), and the delegation of links connecting the previous elements at each level of decomposition. For example, first-level functions will be defined, connected by exchanges, then sub-functions will be inserted "into" each function, and a delegation link will be placed between a sub-function and the end of each exchange connecting the mother function to its sister functions.

In addition to the added complexity if a sub-function needs to be moved from one level to another, or if exchanges need to be added from the sub-functions, experience shows that this approach is neither the most natural nor the most common. For example, when modeling textual requirements, each of these requirements will result in the creation or enrichment of leaf functions, and exchanges will naturally occur between these leaf functions. One may then want to group these leaf functions into parent functions, but without having to go back and recreate all the exchanges to link them to the first-level functions with delegation links at each level. This is rather a bottom-up approach, and most often a combination of both (middle-out) is used.

This is why Arcadia recommends that, in a finalized state of modeling, only leaf functions carry exchanges, directly between them and without delegation. Thus, in a bottom-up approach, it will suffice to group leaf functions into a parent function at as many levels as desired; in a top-down approach, it will suffice to create sub-functions and then move each exchange from the mother to the sub-function that will take care of it.

Arcadia and Capella then propose automatic synthesis functions, only in the representation, to display diagrams in which the exchanges of the sub-functions are reported on the parent function if it is the only one visible. Similarly, exchanges can be grouped into hierarchical categories, which allow a simplified representation of several exchanges of the same category into a synthetic pseudo-exchange*.

*Capella supports simple (and multiple) exchange categories, but does not yet support hierarchical categories.
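The representation-only synthesis described above can be sketched as follows. This is a minimal Python illustration of the principle, not Capella's actual algorithm. The names `visible_ancestor`, `synthesize` and `parent_of` are hypothetical. It assumes exchanges are stored only between leaf functions. Each exchange is then displayed between the nearest visible ancestors of its ends, and it is hidden when both ends fall inside the same visible function.

```python
from collections import defaultdict


def visible_ancestor(function, parent_of, visible):
    """Walk up the functional breakdown until a function shown on the diagram is found."""
    current = function
    while current is not None and current not in visible:
        current = parent_of.get(current)
    return current


def synthesize(exchanges, parent_of, visible):
    """Report leaf-level exchanges onto the functions visible on a diagram.

    exchanges: (exchange name, source leaf, target leaf) triples
    Returns {(shown source, shown target): [exchange names]}.
    Only the representation changes: the model still holds leaf-to-leaf exchanges.
    """
    shown = defaultdict(list)
    for name, source, target in exchanges:
        s = visible_ancestor(source, parent_of, visible)
        t = visible_ancestor(target, parent_of, visible)
        if s is None or t is None or s == t:
            continue  # not displayable, or internal to one visible function
        shown[(s, t)].append(name)
    return dict(shown)


parent_of = {
    "Acquire GPS": "Locate",
    "Acquire INS": "Locate",
    "Fuse position": "Locate",
    "Compute route": "Plan",
}
exchanges = [
    ("GPS fix", "Acquire GPS", "Fuse position"),
    ("INS state", "Acquire INS", "Fuse position"),
    ("position", "Fuse position", "Compute route"),
    ("heading", "Acquire INS", "Compute route"),
]
# Diagram showing the parent "Locate" collapsed, next to the leaf "Compute route".
print(synthesize(exchanges, parent_of, visible={"Locate", "Compute route"}))
# prints {('Locate', 'Compute route'): ['position', 'heading']}
```

Because the model itself keeps only leaf-to-leaf exchanges, moving a leaf function to another parent requires no rework of delegation links: the synthesis simply gives a different picture.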

The dataflow describes all the inputs required by each function and its potential outputs. However, these outputs and inputs may not be constantly solicited or used in all the system usage contexts, as defined in particular by its capabilities.

Similarly, the functional dependencies in the dataflow (functional exchanges) indicate that a function may receive its inputs from several other functions that are capable of producing them, but not necessarily all at the same time.

Therefore, the dataflow describes all the possible exchanges between functions. These do not depend on the way the system is being operated, which is why the dataflow is invariant and is not modified from one usage context to another.

Furthermore, the system is subject to variable usage conditions (or use cases), not all of which may require the full range of production capabilities and exchanges between all functions.

Thus, in one of these use cases (referred to as a context, often described in a capability), only a subset of the dataflow is valid and used. This is what is described by the functional chains and scenarios associated with the capability: which part of the dataflow is used in a given context, and how this subset of the dataflow is implemented. And that's why functional chains and scenarios are described separately from the dataflow itself, and depend on the context (capability) in which they are exercised.
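The relation between the invariant dataflow and a context-dependent chain can be illustrated with a small check. This is a minimal Python sketch, assuming a hypothetical model where exchanges are identified by name and the chain is linear (a single path with no branching). `Exchange`, `DATAFLOW` and `chain_subset` are illustrative names. The check verifies that a chain only selects exchanges that already exist in the dataflow, and that each exchange leads to the next one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exchange:
    name: str
    source: str
    target: str


# The dataflow: every exchange possible between functions, in any context.
DATAFLOW = {
    e.name: e
    for e in [
        Exchange("radar plot", "Detect by radar", "Track"),
        Exchange("AIS report", "Receive AIS", "Track"),
        Exchange("track", "Track", "Display situation"),
        Exchange("alert", "Track", "Raise alarm"),
    ]
}


def chain_subset(chain, dataflow):
    """Return the exchanges of the dataflow used by a linear functional chain.

    A chain only selects a path: referencing an exchange that is not in the
    dataflow, or two successive exchanges that are not connected through a
    common function, is an error.
    """
    missing = [name for name in chain if name not in dataflow]
    if missing:
        raise ValueError(f"exchanges not in the dataflow: {missing}")
    path = [dataflow[name] for name in chain]
    for previous, current in zip(path, path[1:]):
        if previous.target != current.source:
            raise ValueError(f"'{previous.name}' does not lead to '{current.name}'")
    return path


# Context "surveillance without AIS": only part of the dataflow is exercised.
used = chain_subset(["radar plot", "track"], DATAFLOW)
print([e.name for e in used])  # ['radar plot', 'track']
```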

In Arcadia, initially, a functional chain is intended to carry non-functional constraints, typically end-to-end, in a given context (capability):

A functional chain is a means to describe one specific path among all possible paths traversing the dataflow,

- either to describe an expected behavior of the system in a given context,

- or in order to express some non-functional properties along this functional path (latency, criticality, confidentiality, redundancy…).

In order to avoid any ambiguity, especially when allocating non-functional properties to a functional chain (latency, criticality, confidentiality level…), some rules should be defined and respected to make the meaning of a functional chain's contents precise.

As an example, let's consider the case of a latency that the functional chain must respect end-to-end:

- If it is a question of expressing a need (specification) for a delay between a source event and a consequence, then a ‘1 start - 1 end’ rule is sufficient and should be applied, otherwise there is ambiguity.

- If it is a question of expressing the need for multiple consequences or multiple source events, then an interpretation rule (first or last arrival, etc.) must be added (e.g. latency is specified between the first of the inputs and the output, or between the input and the last, or the first, of the outputs…).

- If we want to express, as a result of the design, that a latency property holds in these situations, then it must hold regardless of the intermediate inputs. Otherwise, if the actual latency depends on additional inputs, this must be specified. But FCs do not have the required expressiveness for the temporal axis, loops, etc., so in this case a scenario, a constraint, text, or a dedicated viewpoint should be used for this expression.

- If we want to calculate latency by analyzing the model, either we use the simple cases mentioned above (‘1I/1O’ or ‘nI/1O’ or ‘1I/nO’ + conventions), or we must define the behavior of each function in addition (output/input dependencies, synchronizations between them, etc.), for example in the form of an extension – and the associated conventions and interpretation rules (model of computation?) must be defined.

Obviously, I would recommend the simplest solution whenever there is no counter-indication to do so.

Note that this may require characterizing/classifying functional chains according to their uses and the associated conventions for each.
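The simplest case above (‘1 start - 1 end’, or ‘1I/1O’) is the only one where latency can be computed without defining a model of computation. Here is a minimal Python sketch of that case under explicit assumptions. The per-function and per-exchange durations are hypothetical attributes attached to model elements. Each function is assumed to start as soon as its single input arrives, so durations simply add up. `end_to_end_latency` is an illustrative name. Multi-input or multi-output chains would need the additional conventions discussed above.

```python
def end_to_end_latency(chain, function_ms, exchange_ms):
    """Latency of a linear '1 start - 1 end' functional chain, in ms.

    `chain` alternates functions and exchanges: [f0, e0, f1, e1, ..., fn].
    Assumes each function starts as soon as its single input arrives (no
    waiting on other inputs), so durations simply add up.
    """
    if len(chain) % 2 == 0:
        raise ValueError("a chain must start and end with a function")
    functions, exchanges = chain[0::2], chain[1::2]
    return sum(function_ms[f] for f in functions) + sum(exchange_ms[e] for e in exchanges)


# Hypothetical budgets attached to model elements.
chain = ["Detect target", "plot", "Track target", "track", "Display track"]
function_ms = {"Detect target": 20, "Track target": 35, "Display track": 15}
exchange_ms = {"plot": 5, "track": 10}
required_ms = 100

latency = end_to_end_latency(chain, function_ms, exchange_ms)
print(latency, "ms, requirement met:", latency <= required_ms)
# prints 85 ms, requirement met: True
```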

Functional Chains Vs Scenarios:

Both can and probably should be used. Functional Chains (FC) are more appropriate for describing a “path” in the dataflow, either to better understand the dataflow itself, or to specify (often non-functional) constraints such as latency expectations, criticality for safety reasons (e.g. associated with a feared event)…

For time-related details, scenarios (SC) are better adapted, but they hide the overall context that dataflow describes.

When FCs become complex (especially with several inputs and outputs), understanding their behavior may become tricky, and scenarios could be easier to understand. A rule of thumb could be: “if you need to show how the FC behaves by using your mouse or hands on a diagram, then consider using scenarios.”

Although it is true that scenarios and functional chains are two facets of the same concept, each represents it for a different purpose:

- FC: we see its context and the other available paths within a dataflow; it is rather intended to carry non-functional constraints of the "end-to-end" type;

- SC: highlights the chronology, which is sometimes implicit or ambiguous in FC; can be defined at any level and be partial (i.e. "omit" parts of the FC).

Data flows are there to show dependencies between functions (data required for operation, data produced), especially in order to build and justify the resulting interfaces. So it is indeed "real" exchanges that must be considered and not just pure control without any underlying "concrete" exchange.

I think that trying to transform Arcadia data flows into activity diagrams (AcD) or EFFBD would be a mistake: they do reflect the essential initial objective of expressing the need and orienting the solution (architecture):

- express the "contract" or "operating instructions" of each function (what it needs to operate and what it can provide),

- express the dependency constraints between functions (what F2 needs can be provided by F1, or F3),

- and do so globally (not locally to a diagram, as in an AcD or EFFBD): the function is characterized by the sum of all its dependencies expressed in all the diagrams, and these must be coherent (which is visible and done through the notion of function ports).
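The "contract" view of functions can be sketched as a dependency graph built from all declared inputs and outputs, with no ordering implied. The following minimal Python sketch rests on an assumption: each function's contract (needed items, provided items) has already been gathered from every diagram, for instance through its ports. `CONTRACTS`, `dependency_graph` and `unmet_inputs` are hypothetical names. It shows that what F2 needs can be provided by F1 or F3. It also reports an input that nobody provides.

```python
from collections import defaultdict

# Hypothetical contracts: function -> (items it needs, items it can provide).
# In a model, these would be gathered from every diagram through the function
# ports, not from a single diagram.
CONTRACTS = {
    "F1": (set(), {"position"}),
    "F3": ({"gps fix"}, {"position"}),
    "F2": ({"position"}, {"route"}),
}


def dependency_graph(contracts):
    """Return {function: {needed item: sorted candidate providers}}.

    No ordering is implied: it only states who *can* provide what.
    """
    providers = defaultdict(set)
    for name, (_, outputs) in contracts.items():
        for item in outputs:
            providers[item].add(name)
    return {
        name: {item: sorted(providers.get(item, ())) for item in sorted(inputs)}
        for name, (inputs, _) in contracts.items()
    }


def unmet_inputs(contracts):
    """Inputs that no function in the dataflow is able to provide."""
    graph = dependency_graph(contracts)
    return sorted(
        (name, item)
        for name, needs in graph.items()
        for item, candidates in needs.items()
        if not candidates
    )


print(dependency_graph(CONTRACTS)["F2"])  # {'position': ['F1', 'F3']}
print(unmet_inputs(CONTRACTS))            # [('F3', 'gps fix')]
```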

This contract or "user manual" of a function may include, as input dependencies, "activation conditions" indicating that the function can only be executed upon explicit demand: the characterization of exchange items as events can, for example, fulfill this purpose. Therefore, a functional exchange can also be activating, for example.

But this is absolutely not a sequencing link in the EFFBD or AcD meaning, a link that simply translates a precedence or execution anteriority; by the way, this often appears unnecessary or even harmful to impose while describing the need or orienting a solution, as it would be an over-specification issue, risking freezing constraints that do not need to be.

Let's take an example:

When Mr. Dupont's car arrives at my garage, its engine is hot, so I can do the oil change first and then change the coolant. As a result, I tend to put sequence flows between these two functions in this order.

But for Mr. Durand, who dropped off his car at the same time, his engine is cold when I take care of it after Mr. Dupont's, so I will first change the coolant before doing the oil change. So, pure sequence flows are "contextual" and do not reflect an "intangible" dependence between functions.

Moreover, if we look at the change of the coolant alone, we can hardly see what it would need as input from the oil change. On the other hand, we have overlooked (perhaps by focusing a little too much on control) an essential input, which is the engine temperature, and also the need for a function to control it, or even to cool down/heat up the engine beforehand…

Let us remember that Arcadia was designed for the description and verification of architectures, and not for directly executable processes. As a result, there are different needs and also limits to be put in place: for example, if we were to put a pure sequence link (without any data exchange) between two functions, and each one was allocated to a component located on a different processor, we would introduce an inconsistency in the definition of the architecture and interfaces: without "physical" communication between the two components, there is no way to impose the order of precedence described in this way.
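The inconsistency described above can be detected on a model once functions are allocated. Below is a minimal Python sketch, assuming hypothetical inputs: pure sequence links as (before, after) pairs, data exchanges as (source, target) pairs, and a function-to-component allocation map. The function name and example data are illustrative. The sketch flags a sequence link when no data exchange carries it and its two functions sit on different components, because no interface exists through which the order could be enforced.

```python
def inconsistent_sequence_links(sequence_links, exchanges, allocation):
    """Return pure sequence links that the architecture cannot enforce.

    sequence_links: (before, after) pairs of functions, ordering only
    exchanges:      (source, target) pairs of functions exchanging data
    allocation:     function -> component it is allocated to
    """
    data_pairs = set(exchanges)
    flagged = []
    for before, after in sequence_links:
        carried_by_data = (before, after) in data_pairs
        same_component = allocation[before] == allocation[after]
        # A pure ordering constraint across components has no interface to travel on.
        if not carried_by_data and not same_component:
            flagged.append((before, after))
    return flagged


allocation = {
    "Acquire image": "Camera software",   # on the camera processor
    "Compress image": "Camera software",
    "Archive image": "Ground software",   # on a ground server
}
exchanges = [("Acquire image", "Compress image"), ("Compress image", "Archive image")]
sequence_links = [
    ("Acquire image", "Compress image"),  # also carried by a data exchange
    ("Acquire image", "Archive image"),   # pure sequence, different processors
]
print(inconsistent_sequence_links(sequence_links, exchanges, allocation))
# prints [('Acquire image', 'Archive image')]
```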

However, it may be necessary to express - separately - the aspects of 'sequence in a given context'; the Arcadia functional chains and scenarios are there to express this aspect, without polluting the dataflow, by separating the concepts well. Pure sequencing information can be added here if needed.

A loop with multiple iterations can be represented, for example, in a scenario (sequence diagram), and even a pure sequence link can be used in a functional chain or operational process (being aware of the risk of inconsistency between sequence links and real interfaces, mentioned above).

Similarly, if necessary, a sequencing function that commands those to be chained (involving an exchange with each of them, of the activation item type) and one or more scenarios that express the behavior of this function in different contexts can be added, thus reflecting the required activation order in each context.

Reminder: Arcadia separately defines the nature (or type) of data that can be exchanged (between functions or between behavioral components) and the use that is made of them.

The data model describes a piece of data independently of any use: its composition (its attributes), its relationships, possibly non-functional properties such as the level of confidentiality for example (via associated properties or constraints).

The content of each exchange is described by the concept of Exchange Item, which groups (and references) several data to be exchanged as a whole, simultaneously and coherently with each other (for example, the three geographical coordinates and the velocity vector of a mobile).

Each exchange carrying an exchange item references it in turn in the functional dataflow (several exchanges carrying the same EI may reference it). But so far, only the nature of the exchanged data has been described, which details the dependency between the functions of the dataflow.

Most often, the need to specify that a data must take a particular value arises to describe the expected behavior in a given context, i.e. in a reference to an exchange of a scenario or a functional chain. In this case, it is the reference to the exchange mentioned by the functional chain or the scenario that will specify the value taken by each data mentioned in the EI*.

Another need arises when describing conditional behavior. This is the case for a 'control node' in a functional chain: the condition on the data is expressed on each 'sequence link' leaving the control node.

This is also the case for a 'control functional sequence' in a scenario: the condition on the data is expressed on each control functional sequence**.

*This reference is implemented, in Capella, in the form of a 'functional exchange involvement' for a functional chain, or a 'sequence message' for a scenario; the description of the value on the data can be defined in the 'exchange context'.

**In Capella, the description of the value on the data can be defined in the 'guard' of the 'operand' grouping the exchanges concerned.
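The separation described above can be sketched as plain Python dataclasses. The data model gives composition only, the exchange item gives what is exchanged together, the exchange gives the dependency, and a contextual reference carries the expected values. This is a hypothetical illustration and not Capella's meta-model or API: the class names `Data`, `ExchangeItem`, `FunctionalExchange`, `ExchangeReference` and the method `expect` are assumptions that mirror the concepts in the text.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Data:
    """Data-model class (hypothetical): its composition only, independent of any use."""
    name: str
    attributes: tuple[str, ...]

@dataclass(frozen=True)
class ExchangeItem:
    """Data exchanged as a whole, simultaneously and coherently."""
    name: str
    elements: tuple[Data, ...]

@dataclass(frozen=True)
class FunctionalExchange:
    """Dataflow dependency: states what flows between two functions, never its value."""
    source: str
    target: str
    item: ExchangeItem

@dataclass
class ExchangeReference:
    """Use of an exchange in one context (functional chain step or scenario message).
    Expected values are attached here, not on the exchange itself."""
    exchange: FunctionalExchange
    values: dict[str, object] = field(default_factory=dict)

    def expect(self, data_name: str, value: object) -> None:
        # Only data actually carried by the exchange item may be given a value.
        carried = {d.name for d in self.exchange.item.elements}
        if data_name not in carried:
            raise KeyError(f"{data_name} is not carried by {self.exchange.item.name}")
        self.values[data_name] = value

position = Data("Position", ("x", "y", "z"))
velocity = Data("Velocity", ("vx", "vy", "vz"))
kinematics = ExchangeItem("MobileKinematics", (position, velocity))
fe = FunctionalExchange("Locate mobile", "Display mobile", kinematics)

# The same exchange, referenced by two scenarios with different expected values.
mobile_stopped = ExchangeReference(fe)
mobile_stopped.expect("Velocity", (0.0, 0.0, 0.0))
mobile_moving = ExchangeReference(fe)
mobile_moving.expect("Velocity", (12.0, 0.0, 0.0))
```

The exchange is defined once and stays context-free. Only the references, which play the role of the 'functional exchange involvement' or 'sequence message' mentioned in the footnotes, hold values.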

Let's imagine that we have one or more clients requesting data (or other information) from a server. At the functional level, we will have a "provide..." function allocated to the server component and a "request..." function allocated to the client component.

At the SA level, we can often limit ourselves to defining a single functional exchange from "provide" to "request" to represent the dependency between the functions, and nothing more.

In LA (and possibly in PA), the natural and most logical rule would be to create two functional exchanges:

- One with the request (e.g., the reference to the data) from the "request" function to the "provide" function

- The other with the requested data from "provide" to "request"

This ensures the readability of scenarios and functional chains, and clearly characterizes the inputs and outputs of each function.

These two exchanges and the associated exchange items (EI) could then be translated into a single behavioral exchange between the client and server (with a convention to be defined, such as the request being directed from client to server, which is consistent with scenario sequence messages and returns). The EI of the component would have an IN parameter, the reference to the data, and an OUT parameter, the data itself, and would ideally be connected to the EI of the functional exchanges.

If we want to save modeling effort and use only one functional exchange, we can use the IN and OUT of the EI to represent exchanges in both directions, with the following precautions (in this case, the convention must be described precisely as it is subject to interpretation):

- Either the functional exchange starts from the "request" function to "provide" to represent a query, and the EI has an IN "request parameters" and an OUT "request result"; this is consistent with the design & development view, as a message or request sent from the requester to the provider; this is what we would like to see on a scenario, with a sequence message and a return.

- Or, in the same situation, the exchange starts from "provide" because it is the direction of data flow, but the convention needs to be defined and seems less natural.

- Or we can use two exchanges to ensure the consistency of functional chains.
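The translation of the two functional exchanges into a single client-to-server behavioral exchange, with IN and OUT parameters, can be sketched as follows. This sketch assumes the convention that the component exchange is directed from client to server, like a call with a return. The names `merge_request_reply`, `Direction` and the track example are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum

class Direction(Enum):
    IN = "in"    # sent by the requester (client) with the request
    OUT = "out"  # returned by the provider (server) with the reply

@dataclass(frozen=True)
class FunctionalExchange:
    source: str            # source function
    target: str            # target function
    data: tuple[str, ...]  # data carried by the functional exchange item

@dataclass(frozen=True)
class Parameter:
    name: str
    direction: Direction

@dataclass(frozen=True)
class ComponentExchange:
    source: str            # requesting component (client)
    target: str            # providing component (server)
    item_name: str
    parameters: tuple[Parameter, ...]

def merge_request_reply(request: FunctionalExchange, reply: FunctionalExchange,
                        client: str, server: str, item_name: str) -> ComponentExchange:
    """Turn a request/reply pair of functional exchanges into one component exchange
    directed from client to server (the assumed, documented convention)."""
    if (request.source, request.target) != (reply.target, reply.source):
        raise ValueError("request and reply must link the same functions in opposite directions")
    params = tuple(Parameter(d, Direction.IN) for d in request.data)
    params += tuple(Parameter(d, Direction.OUT) for d in reply.data)
    return ComponentExchange(client, server, item_name, params)

request = FunctionalExchange("Request track", "Provide track", ("track id",))
reply = FunctionalExchange("Provide track", "Request track", ("track",))
ce = merge_request_reply(request, reply, "Client", "Server", "GetTrack")
```

Keeping two functional exchanges preserves the function inputs and outputs. The single component exchange then reads naturally in a scenario, as a sequence message followed by its return.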

In the Arcadia method, having multiple exchanges exit the same port is not only allowed but recommended, and even essential in certain cases. This is not just to simplify the work of the modeler, although it does contribute to this:

By definition of a function, output ports express what it is capable of producing, independently of its uses (and thus of the number of uses).

For example, a GPS localization function is capable of providing a position. Depending on the case, this position can be used by one, two, 10... other functions, and this is not the concern of the localization function. It is out of the question to define as many output ports as there are potential users: the function's definition would then depend on the number of its users and would have to be modified every time a new one arrives...

Therefore, in this case, it has one output port, and only one. Each use of this position will result in a functional exchange between this output port and a port of the function that needs it.

Note that the remark also applies to input ports: they indicate what inputs the function needs to work; even if a function can receive data from multiple sources, if it processes them indiscriminately, then only one input port should be defined, which receives exchanges from various sources.

Linking several exchanges to a port means that they have no a priori relationship with each other: they are likely to occur at any time, independently of each other (such as requests from multiple clients to a server, for example, or sending emails by a server to multiple recipients), and the data transmitted a priori only share a common type (defined in the data model and the relevant exchange item), but they may be different instances.

On the other hand, to indicate that a position calculation function must combine a position from a GPS and another position received from an inertial unit, for example, this function will have two input ports (one for each source) carrying the same exchange item, which will group and describe the coordinates of the position.

If additional constraints are to be specified, such as simultaneous distribution of the same data to multiple recipients, or selective sending..., then dedicated functions for this purpose (split, duplicate...) are used. And if the same output is to be provided with different quality of service (e.g., a position accurate to within 10 meters every 10 seconds and another accurate to within 100 meters every second), two distinct ports will be defined, each with a constraint or a requirement on the quality of service.
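The port rules above can be sketched as a tiny model. A producer keeps a single output port whatever the number of its users, each use is a separate exchange, and a fusion function declares two input ports carrying the same exchange item. The `Port`, `Function`, `connect` names and the function names are hypothetical; the type check on the exchange item is an assumption added for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Port:
    name: str
    item: str  # exchange item the port produces or consumes

@dataclass
class Function:
    name: str
    inputs: list[Port] = field(default_factory=list)
    outputs: list[Port] = field(default_factory=list)

@dataclass(frozen=True)
class FunctionalExchange:
    source_function: str
    source_port: str
    target_function: str
    target_port: str

def connect(src: Function, out_port: str, dst: Function, in_port: str) -> FunctionalExchange:
    """Create one exchange per use; the producer's definition is left untouched."""
    out = next((p for p in src.outputs if p.name == out_port), None)
    inp = next((p for p in dst.inputs if p.name == in_port), None)
    if out is None or inp is None:
        raise KeyError("unknown port")
    if out.item != inp.item:
        raise TypeError(f"{out.item} cannot feed {inp.item}")
    return FunctionalExchange(src.name, out.name, dst.name, inp.name)

gps = Function("Compute GPS position", outputs=[Port("position", "Position")])
inertial = Function("Compute inertial position", outputs=[Port("position", "Position")])
users = [Function(n, inputs=[Port("position", "Position")])
         for n in ("Display position", "Plan route", "Record track")]

# Three uses give three exchanges leaving the same, single output port.
uses = [connect(gps, "position", u, "position") for u in users]

# Combining two sources: two distinct input ports carrying the same exchange item.
fusion = Function("Fuse positions",
                  inputs=[Port("gps position", "Position"), Port("inertial position", "Position")])
fused_inputs = [connect(gps, "position", fusion, "gps position"),
                connect(inertial, "position", fusion, "inertial position")]
```

Adding a fourth user only adds an exchange. Neither `gps.outputs` nor any existing exchange has to change.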

Arcadia does not provide for the allocation of functions to implementation components (IC), but only to behavioral components (BC).

It's not so much the allocation of functions to ICs that is problematic, but rather its consequences for exchanges: what happens, for example, if two functions communicate, one on an IC and the other on a BC? Through which ports? What is the concrete physical meaning of this?

Likewise, this makes the meta-model more complex (should functional exchanges be allocated to physical links?), as well as the exploitation of the model by analysis views (safety, security, RAMT, etc.).

In fact, in a number of cases, it may be more appropriate to ask why: for example, if power supply functions are placed in an IC for an electronic system-level model, is it because they need to interact with processing functions? If so, there is probably a behavioral component to define to manage them, interaction "protocols" to define (at the level of exchanges and behavioral components), and routing of these exchanges through physical links, etc.; placing the 'power supply' function on an IC would not solve all of this. And is it really useful at this engineering level? It all depends on how it is used; if the main reason is "documentary" (to remember the need for such a function in later development), attaching a constraint or requirement on the component concerned, qualifying them appropriately (voltages, currents, loads, etc.), would probably be simpler and more useful.

«In physical architecture, a Behavioral Component (BC) could be a hardware component. In this case, why can't we create Physical Links between BCs? With Capella, I'm forced to artificially create 2 BCs.»

«A Behavioral Component could be a hardware component.» Yes, in the sense that a functionality can be realized by a hardware component (e.g. generating a 28V power supply, managing memory with an MMU...). This component is therefore a behavioral component.

However, even in this case, it is necessary to distinguish between the outputs of the functionality (electrical energy, memory segment addresses...) and the means of conveying them (cables or backplane, address bus...). It is therefore important to distinguish behavioral exchanges from physical links, and to be able to allocate the former to the latter.

Similarly, for components, the interest of distinguishing behavioral components from implementation components lies notably in different non-functional properties: for example, if I want to allocate software components to execution resources, I need to separately specify the resources required by each software component (BC), and the resources offered by each implementation component (IC): computing power, memory, etc., in order to compare them. And I may also want to define different ways of allocating the same BC to different ICs. To achieve all this, it is therefore necessary to have the two concepts separated.

Of course, simplifications are always possible, but it is necessary to make sure that this will not cause other problems, especially for the exploitation of the model by analysis viewpoints (safety, security, RAMT, etc.). It was therefore preferred to systematically create an IC in these situations, which sometimes does require "putting an IC around a BC," but makes the model more regular and easier to exploit.
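The resource comparison that motivates separating BCs from ICs can be sketched as a check of required versus offered resources for alternative allocations of the same BCs. Everything here is a hypothetical assumption for illustration: the two resource kinds (`cpu_mips`, `memory_mb`), the component names and all figures. The check simply sums requirements per IC.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Resources:
    cpu_mips: float
    memory_mb: float

@dataclass(frozen=True)
class BehavioralComponent:
    name: str
    required: Resources   # what the software component needs

@dataclass(frozen=True)
class ImplementationComponent:
    name: str
    offered: Resources    # what the execution resource provides

def overloaded_ics(allocation: dict[str, str], bcs: list[BehavioralComponent],
                   ics: list[ImplementationComponent]) -> list[str]:
    """Return the ICs whose offered resources are exceeded by the BCs allocated to them."""
    bc_by_name = {bc.name: bc for bc in bcs}
    load = {ic.name: [0.0, 0.0] for ic in ics}  # [cpu, memory] per IC
    for bc_name, ic_name in allocation.items():
        req = bc_by_name[bc_name].required
        load[ic_name][0] += req.cpu_mips
        load[ic_name][1] += req.memory_mb
    return sorted(ic.name for ic in ics
                  if load[ic.name][0] > ic.offered.cpu_mips
                  or load[ic.name][1] > ic.offered.memory_mb)

bcs = [BehavioralComponent("Track manager", Resources(400, 256)),
       BehavioralComponent("HMI server", Resources(300, 512)),
       BehavioralComponent("Recorder", Resources(100, 1024))]
ics = [ImplementationComponent("Board A", Resources(800, 1024)),
       ImplementationComponent("Board B", Resources(500, 2048))]

# Two alternative allocations of the same BCs to the same ICs.
option_1 = {"Track manager": "Board A", "HMI server": "Board A", "Recorder": "Board B"}
option_2 = {"Track manager": "Board B", "HMI server": "Board B", "Recorder": "Board A"}
```

With these made-up figures, `option_1` fits and `option_2` overloads Board B's CPU. Such a comparison needs separate BC and IC elements, each carrying its own properties.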

« If my job is 'power supplies', then a transformer that takes 220V and transforms it into 12V is behavioral, and the physical links are on these components. I don't want to create an Implementation Component (IC or Node) for nothing and have a Behavioral Component inside. What would you do for my example?»

I would distinguish:

- a "voltage converter" BC that receives and provides an electrical voltage (AC or DC, to be specified) through behavioral exchanges and carries out the transfer function,

- and a "transformer" IC to which the electrical wires carrying the electrical voltage are connected as physical links.

That way, I could distinguish between a case where the transformer is used as a voltage converter, and another case where the same transformer (IC) is used as a low-pass filter, for example (the distinction being made by the BC).

(By the way, we could also imagine mechanisms in Capella that would assist in this creation of ICs transparently, as is the case for ports today, created automatically as soon as an exchange is created).

The expressiveness of languages such as basic UML or SysML (as available by default in COTS tools), which generally use 'Blocks' for all types of components and functions, is often insufficient, as is the tool support. For example:

- How to differentiate and represent software or firmware components from the boards, processors, FPGAs, etc. that support them, and visualize this allocation in a natural way? How to distinguish the same electrical transformer used as a voltage reducer or as a low-pass filter?

- How to separately specify messages and physical, material links that carry them, while allocating them to each other?

- How to model the routing of messages if they travel through multiple successive or parallel links, or a complex path?

- How to visualize the functional content of components at the same time as the architecture?

- How to represent the functional chains running through the system, and allocate properties to them (latency, criticality...)?

- How to define the modes and states of the system? How to specify the behaviors associated with each state or mode and what they apply to? How to show mode changes in scenarios?

- How to separate and represent the physical data formats coherently? For example, a position from an inertial unit via an ARINC bus, processed in software, then transmitted to the human-machine interface in Java and on an Ethernet bus via XDR... How to show the transport conditions of data sets?

- How to separately represent the need and solution (as is done for requirements in SSS and SSDD/PIDS) and manage their configurations and different lifecycles?

- The same goes for interfaces, preliminary ICDs, ICDs, and IRSs...

- How to automatically generate documentation, taking these different lifecycle stages into account?

- How to exploit models in order to articulate several levels of engineering?

- How to practically perform impact analysis on all this, especially through automatic analysis of the model according to specialized viewpoints such as safety, RAMT, security, etc., if we do not distinguish the different concepts to consider and confront?

For these various points (and many others), Arcadia and Capella, while not perfect or claiming to be exhaustive, offer proven solutions; among others:

- Separation of functional, behavioral structural, and implementation structural levels

- Explicit allocation of one on the other

- Separation of dataflow from functional dependencies and their uses in a given context (via functional chains and scenarios)

- Addition of dedicated concepts for the articulation and consistency between these different views (functional paths and physical paths, configurations and situations of modes and states, etc.)

- Separation and articulation between data (classes), transport units (exchange items), interfaces, exchanges, and ports

Interfaces in the context of Arcadia and Capella are not classes, and the two concepts are clearly distinct:

- Classes ('Data' in Arcadia) are only used to define the data manipulated in exchanges between functions or components. Defining a method for a class in Capella would have no use in the model; it will never be explicitly called in exchanges between components, but only potentially in the internal software code of the component (outside of the Capella model).

- Interfaces structure the services and means of interaction offered by the components:

- An interface is composed of exchange items (EI), each of these EIs defining an elementary service (or event, data flow...) that can be provided by one or more components and used (required) by other components.

- An interface usually has a functional or semantic coherence: for example, for a multifunction printer, different interfaces can be used for the scanning function, the printing function, the photocopy function, printer management, etc. And the printing function interface will offer EIs (services) for choosing print parameters (paper size, orientation, color or B&W), printing the document, but also alerting in case of lack of paper or ink.

- An EI "carries" a group of data (or parameters), each of which is characterized by its membership class and its name in the EI.

- An interface is attached to a component port and contributes to defining its "user manual."
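The printer example can be sketched as follows. An interface is a named group of EIs, each EI carries parameters typed by a data-model class, and the interfaces provided by a port give its "user manual". The class names, the `Kind` enumeration and the EI names are hypothetical; they are not Capella's API.

```python
from dataclasses import dataclass
from enum import Enum

class Kind(Enum):
    OPERATION = "operation"  # service invoked by a user component
    EVENT = "event"          # notification emitted by the provider
    FLOW = "flow"            # continuous data flow

@dataclass(frozen=True)
class Parameter:
    name: str
    data_class: str  # data-model class the parameter belongs to

@dataclass(frozen=True)
class ExchangeItem:
    name: str
    kind: Kind
    parameters: tuple[Parameter, ...] = ()

@dataclass(frozen=True)
class Interface:
    name: str
    items: tuple[ExchangeItem, ...]

@dataclass
class ComponentPort:
    name: str
    provides: list[Interface]
    requires: list[Interface]

def user_manual(port: ComponentPort) -> list[str]:
    """List the elementary services offered through a port."""
    return [f"{i.name}.{ei.name} ({ei.kind.value})" for i in port.provides for ei in i.items]

printing = Interface("Printing", (
    ExchangeItem("SetPrintParameters", Kind.OPERATION,
                 (Parameter("paper size", "PaperSize"),
                  Parameter("orientation", "Orientation"),
                  Parameter("color mode", "ColorMode"))),
    ExchangeItem("PrintDocument", Kind.OPERATION, (Parameter("document", "Document"),)),
    ExchangeItem("PaperOut", Kind.EVENT),
    ExchangeItem("InkLow", Kind.EVENT),
))
print_port = ComponentPort("print port", provides=[printing], requires=[])
```

No class here defines methods. The services are EIs grouped in the interface, in line with the distinction between classes and interfaces drawn above.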

All of these concepts are not necessarily to be used in a given context and may - must - be adjusted to strict needs.

Model building hints

First, is Arcadia suited to your scope and goals? Do you really target solution building, architecture definition and design, and engineering mastery? Or are you mainly addressing a better understanding of customer needs, focusing on customer-side capability and operational deployment, programmatic issues… that the customer wants you to address (possibly together)?

- If mastering stakeholder needs is the major expectation of your work, then an approach based on an “architecture framework” might fit better

- If your purpose is to explore the problem space or the solution space at high level, for orientation or concept definition and experimentation, here again, Arcadia does not fully address this: it will possibly enter the game later

- On the other hand, if (architecture-driven) engineering is your focus, Arcadia targets engineering and architecture design, including the operational and capability-related considerations that feed engineering – and here it is much better suited than architecture frameworks.

Before starting, it would be useful to list the main challenges and expectations of engineering, and to build a ‘maturity map’ of the subjects that your engineering will have to face. This orients modelling towards low-maturity subjects first, when and if appropriate. Driving modelling by its major uses and challenges is key to defining its contents and stop criteria.

Some of the most frequent misunderstandings in applying the method lie in:

- Not clearly separating need (OA, SA) from solution (LA, PA); this clutters the need description with solution considerations, making it costly to maintain and difficult for the customer to read and approve; once approved by the customer, it constrains the solution and prevents considering other alternatives;

- Therefore, take care to capture only need elements in SA especially, and keep it as small as possible.

- Considering that the functions describing the solution (e.g. in LA) are just a refinement of the need functions (in SA); this is usually not the case, especially if you reuse existing parts of the system, and this assumption might “corrupt” your SA or skew your LA;

- So check consistency between need and solution by traceability links (e.g. between system functions and logical functions, between functional chains and scenarios on both sides…) but don’t try to strictly align both sides.

- Reducing the physical architecture to a mere allocation of logical functions and components to hardware implementation components; if so, then your LA will probably be too detailed, thus costly to maintain and unnecessarily cluttered for most users;

- Instead, consider the LA as the introductory high-level description that helps present and discuss the major features and alternatives of the architecture. It should be sufficient to reason on major architecture design choices without detailing the behavior too much; that detail belongs in the physical architecture, where interface definition can also be finalized. Of course, this can be adapted to your own context, but beware of the temptation to over-detail too early.

Other typical pitfalls follow:

- Wrong level of focus on concerns and parts of the system and model: most engineers focus on what they know, and detail this a lot, while neglecting new or low maturity parts.

- You should of course do the other way around, in order to raise possible problems and manage risk as early as possible.

- Addressing reuse of existing components too early or too late: building a solution from parts without correctly mastering the real need, or conversely, designing a solution architecture from scratch and only then trying to insert existing parts.

- To manage the confrontation between the contents of existing systems and new expectations, once modelling practices are established, existing contents should be described in an initial PA, while new needs should be captured in OA and SA. The confrontation then takes place in the LA: the LA describes the expected new architecture and is compared with the existing physical assets. Gaps are detected between LA and PA, and the physical architecture is modified accordingly to specify the required evolutions.

- Considering IVV issues late, waiting for the definition of the solution to be complete.

- Capabilities are often a good way to drive and structure the IVV and delivery strategy, while scenarios and functional chains give you a prefiguration of test campaigns and test suites. The model will then help you identify the required order of component deliveries and the contents of test means, for example. This can also be useful during the bid phase, to size IVV activities and means and to shape the IVV strategy. Your design might also benefit from considering IVV issues early: making capabilities easier to verify, splitting functional chains according to the progressivity of integration, etc.

- Modelling without a clear vision of the kind of engineering problems that you want to solve with this modelling. The criteria for stopping the modelling and its orientation, as well as the guidelines given to each, depend on this answer. For example:

- If it is a question of justifying a development cost, a rough model is more than enough.

- If the interfaces need to be justified, then the functional analysis and data must be detailed, but especially between the components (or actors).

- If you need to secure the reuse of existing assets, the functional analysis must be fine-tuned because it is in the details that reuse incompatibilities are hidden.

- For performance analysis, it is towards the functional chains and the superposition scenarios that we must look first, then the definition of the processing and communication resources, with a functional detail limited to the dimensioning.

- For IVVQ, a homogeneous definition guided by scenarios and functional chains is required; the architecture must be broken down according to integration constraints, dependencies between components and separation of test chains (functions crossed by the tests).

- Regarding roles and responsibilities, just a strong warning: there is a risk that modelling is run alongside “the real engineering stuff” rather than at its heart. This may result in mistakes in the model and poor representativeness, but also in architecture and design decisions that do not rely on the model, and thus in weaker engineering and poor value for modelling.

- I would recommend that the architect, design authority and major authorities in system design be fully aware of model contents, and justify their decisions based on it. This means not only monthly reviews, but day-to-day validation and appropriation of the model with the modelling team – or the architect could also be the lead modeler.

Arcadia, based on a component-based approach to architecture construction, relies on functional definition (dataflows + capabilities, functional chains, scenarios) to define and justify components, their interfaces, their expectations, and especially transverse behaviors that involve multiple components (functional chains, states, modes...).

- Components are created by grouping or segregating constrained functions

- segregation/grouping constraints can both be functional and non-functional, but are all expressed through functional definition: safety-related feared events & hazards, reuse, product line, etc.

- Interactions between components are automatically deduced from functional allocation

- via associated data flows that cross component boundaries

- Refinement is applied first to the functional analysis and the exchanges, and therefore automatically applies to components to which functions are allocated. Justification is done through functional traceability with the previous requirement model and requirements

- via choice of precise behavioral design for each expected function, overall optimization between functions, etc.

- The behavior of each component is clearly defined by the scenarios that are allocated to it, as well as the functional content that justifies and specifies its interfaces and scenarios

- Each component is described as a full-fledged subsystem,

- with its scenarios, its interfaces,

- as well as its functional content,

- the functional chains that cross it,

- the process for obtaining the data it provides and the use of the data it requires...

- In a product line approach, variabilities are defined on the functional level (optional functions or functional chains, for example), and their impact on components is visible, traceable, providing an architecture that is easier to design for the product line.

- The overall behavior of the system is always visible, as are transverse functional chains

- since they are based on functional definition, which remains visible and transverse to the definition of the components.
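The statement that interactions between components are deduced from functional allocation can be sketched as a derivation. Every functional exchange whose source and target functions are allocated to different components yields a component-level dependency, and reallocating a function updates these dependencies with no other change. The function and component names below are hypothetical, and exchanges are simplified to `(source function, target function, exchange item)` tuples.

```python
from collections import defaultdict

def component_interactions(exchanges, allocation):
    """Derive component interactions from functional exchanges crossing component
    boundaries. exchanges: iterable of (source_fn, target_fn, item);
    allocation: function name -> component name."""
    interactions = defaultdict(set)
    for source_fn, target_fn, item in exchanges:
        source_bc, target_bc = allocation[source_fn], allocation[target_fn]
        if source_bc != target_bc:  # exchanges internal to a component create no interaction
            interactions[(source_bc, target_bc)].add(item)
    return {pair: sorted(items) for pair, items in interactions.items()}

exchanges = [("Acquire plot", "Build track", "Plot"),
             ("Acquire plot", "Record plot", "Plot"),
             ("Build track", "Display track", "Track"),
             ("Build track", "Record track", "Track")]
allocation = {"Acquire plot": "Sensor", "Build track": "Processing",
              "Record plot": "Processing", "Record track": "Processing",
              "Display track": "HMI"}

baseline = component_interactions(exchanges, allocation)
# Moving one function to a new component changes the derived interactions automatically.
reallocated = component_interactions(exchanges, {**allocation, "Record plot": "Recorder"})
```

Here `baseline` links Sensor to Processing with "Plot" and Processing to HMI with "Track". Moving "Record plot" to a "Recorder" component adds a Sensor-to-Recorder interaction carrying "Plot".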

The basic idea of Arcadia for modeling interfaces between behavioral components is to rely on functional definition:

- precisely describe the expected behavior using functional analysis, through functional exchanges (FE) between functions that will be allocated to behavioral components

- group the data to be exchanged together into an exchange item (EI), which reflects the need to exchange them in a coherent and simultaneous manner

- reference the EI in the FE(s) that carry it between functions

- describe the dynamic behavior using functional chains and scenarios using these functions and exchanges

Once the functions are allocated to behavioral components:

- group and allocate the FE into behavioral component exchanges (CE) between behavioral components

- do the same for functional and behavioral ports if necessary (especially to be able to reuse a "stand-alone" component in a library, for example, without external exchanges)

- reorganize the exchanged data and EIs on the FE, if necessary, to construct the elements that will be exchanged between components (messages, commands, requests, or services, etc.) and allocate them to the CE

- group and structure the EIs exchanged by the components, referencing them in interfaces that characterize the conditions of use of the component; allocate these interfaces to the ports of components exchanging these EIs

- describe the dynamic behavior between components using scenarios that involve the aforementioned CE(s)

- describe the communication steps, if applicable, through mode and state machines whose changes are mentioned in the scenarios; each machine being allocated to a component involved in the communication

In summary, we synthesize and group the functional EIs into an interface (by simple reference), and similarly group the FE into a CE.
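The grouping steps listed above can be sketched as a consistency check plus interface construction. Each FE that crosses a component boundary must be allocated to a CE linking the same two behavioral components, and each CE's interface is built by referencing the EIs of its FEs. The sketch assumes that component exchanges are directed, and all names (`FE`, `CE`, `check_and_build_interfaces`, the example elements) are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FE:
    name: str
    source_fn: str
    target_fn: str
    items: tuple[str, ...]  # exchange items referenced by the functional exchange

@dataclass(frozen=True)
class CE:
    name: str
    source_bc: str
    target_bc: str

def check_and_build_interfaces(fes, ces, fn_to_bc, fe_to_ce):
    """Check that each boundary-crossing FE is allocated to a CE linking the same BCs,
    then group the EIs of its FEs, by reference, into one interface per CE."""
    ce_by_name = {ce.name: ce for ce in ces}
    interfaces = {ce.name: set() for ce in ces}
    errors = []
    for fe in fes:
        src, dst = fn_to_bc[fe.source_fn], fn_to_bc[fe.target_fn]
        if src == dst:
            continue  # internal to one component: no component exchange needed
        ce = ce_by_name.get(fe_to_ce.get(fe.name))
        if ce is None:
            errors.append(f"{fe.name}: not allocated to any component exchange")
        elif (ce.source_bc, ce.target_bc) != (src, dst):
            errors.append(f"{fe.name}: {ce.name} does not link {src} to {dst}")
        else:
            interfaces[ce.name].update(fe.items)
    return {name: sorted(items) for name, items in interfaces.items()}, errors

fes = [FE("fe1", "Acquire plot", "Build track", ("Plot",)),
       FE("fe2", "Build track", "Display track", ("Track",)),
       FE("fe3", "Build track", "Display track", ("Track quality",)),
       FE("fe4", "Display track", "Build track", ("Operator designation",))]
fn_to_bc = {"Acquire plot": "Sensor", "Build track": "Tracker", "Display track": "HMI"}
ces = [CE("ce_plots", "Sensor", "Tracker"), CE("ce_tracks", "Tracker", "HMI")]
fe_to_ce = {"fe1": "ce_plots", "fe2": "ce_tracks", "fe3": "ce_tracks", "fe4": "ce_tracks"}

interfaces, errors = check_and_build_interfaces(fes, ces, fn_to_bc, fe_to_ce)
```

In this example, `fe4` flows from HMI to Tracker but was allocated to a CE going the other way, so it is reported. The modeler would then add a second CE or revise the convention.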

Finally, when behavioral components are allocated to implementation components:

- group and allocate the CE to physical links (PL) which will carry them between implementation components

As a result, it is possible to have several EIs on a component exchange, originating from one or several FEs. However, if we want to indicate that sometimes we exchange one part, and sometimes another, and we want to show it in the CE, it is preferable to create several behavioral exchanges. In this case, it may be necessary to create categories for grouping the behavioral exchanges for documentary purposes.

Note: The software approach known as "component-based development" (CBD) favors encapsulation; that is, we try whenever possible to use a component by only looking at it from the outside, without having to know how it is made inside, and therefore without having to "open the box" to connect to internal sockets.

The ports and interfaces are there to group, abstract, and provide a view of the "user manual" of the component (at least for behavioral components); they should therefore provide the appropriate "natural" level of detail in the component behavioral exchanges. The detailed interactions should be seen at the functional level (via functional exchanges).

Similarly, according to the principles of CBD, we should only connect high-level components (not sub-components) to preserve the abstraction that high-level components constitute (being able to consider them as a black box). The ports of sub-components would therefore only be connected to the ports of the parent component through delegation links. However, experience shows that there may be exceptions, particularly in more physical or material domains. In these cases, direct links between sub-components are necessary.

Textual requirements are, for most customers, the traditional and most used way of specifying their needs (note that more and more customers use and require models as well). So they cannot and should not be discarded in our process, at least as an input for our engineering and a source of justification links towards IVV for Customer.

However, textual requirements suffer from weaknesses that may impact engineering and product quality:

- They are possibly ambiguous, and contradictory or inconsistent with each other, with no formalized language to reduce these ambiguities

- Their relationships and dependencies are not expressed, and being implicit and informal, they may be wrong or contradictory

- They cannot be checked or verified by digital means, in most cases

- They are poorly adapted to collaborative building and reviewing, for the former reasons

- The process of creating links to each requirement (for design, IVV, sub-contracting…) is unclear, without a defined method; the way to verify these links is undefined; the quality of these links proves to be poor in many cases (as discovered late, in IVV)

- Textual requirements (alone) are not suited to describing an expected end-to-end system solution, since each of them only expresses a limited and focused expectation

- They alone can hardly be sufficient to describe subsystems need, including usage scenarios, detailed interfaces, performances, dynamic resource consumption, and more

- ...

Models tend to solve these weaknesses, thanks to:

- Defining a formalized language, less ambiguous and shareable, digitally analyzable to detect inconsistencies

- Bringing internal consistency thanks to the language properties and concepts (e.g. linking functions by data dependency, making them coherent with functional chains, allocating them to components, linking a required function to design architecture behavior implementing it, adding non-functional (NF) requirements such as performance or safety expectations on functional chains, etc.)

- Explicitly describing and tooling the process to build, link, analyze model elements above

- Relating all modeled elements to each other into one global view, thus providing means to check their coherency and consistency

- Favoring collaboration by natural, functional structure, and means to confront different views (e.g. capabilities/ functional chains/scenarios, functions and dataflows, data and interfaces definition, modes & states, components, and more)

- Describing need and solution separately, while providing justification and traceability links that can be semantically checked

- Describing solution based on both functional/NF and structural descriptions, functional/NF one justifying structural definition (interfaces, performances, resources usage…)

- Constituting a detailed components development contract (or subsystem specification), including all the former elements

- Easing IVV strategy and justification by directly feeding test campaigns with capabilities, scenarios and functional chains, thus improving the quality and efficiency of IVV

- ...

This leads, internally in our engineering practices, to using models as much as possible to describe both need and solution at each level, while complementing them with textual requirements, either to detail expectations (e.g. to describe what is intended from a leaf function behavior), or to express requirements that are not efficiently expressible in the model (e.g. environmental or regulation constraints).

However, it should be noted that:

- Not all requirements can be represented in the model.

- Those that can be represented still require a textual description of the elements themselves, which is more expressive and flexible than the model's formalism. This description may or may not be formalized as a 'textual requirement' object in the traditional meaning.

- Requirements that can’t be represented in the model must still be managed, allocated, and traced between systems and subsystems/SW/HW, AND linked to the engineering elements to which they relate (other requirements of different levels, tests, etc.).

But the key point is to treat these requirements according to their real usage in engineering, and governed by the model whenever possible (for structuring, navigation, justification, etc.).

Therefore, internally, requirements will be mostly model elements, and complementary textual ones. Both will be related to customer requirements by traceability links, allowing justification from test results up to customer requirements, through model elements verification.
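The justification path from test results up to customer requirements, through model element verification, can be sketched as a small traceability computation. In this sketch a customer requirement counts as justified only if every model element traced to it is verified by at least one test and all those tests passed. That rule, the element labels and the requirement and test identifiers are hypothetical assumptions; actual justification rules are project-specific.

```python
from collections import defaultdict

def justified_requirements(satisfies, verifies, results):
    """satisfies: model element -> customer requirement ids it traces to;
    verifies: test id -> model elements it verifies; results: test id -> passed?"""
    tests_of = defaultdict(list)
    for test, elements in verifies.items():
        for element in elements:
            tests_of[element].append(test)

    def element_verified(element):
        tests = tests_of.get(element, [])
        return bool(tests) and all(results.get(t, False) for t in tests)

    elements_of = defaultdict(list)
    for element, reqs in satisfies.items():
        for req in reqs:
            elements_of[req].append(element)

    # Requirements with no traced model element are absent from the result.
    return {req: all(element_verified(e) for e in elems) for req, elems in elements_of.items()}

satisfies = {"FC: detect and track": ["CR-12", "CR-13"],
             "Scenario: operator designates target": ["CR-13"],
             "Function: record tracks": ["CR-20"]}
verifies = {"T-01": ["FC: detect and track"],
            "T-02": ["Scenario: operator designates target"],
            "T-03": ["FC: detect and track"]}
results = {"T-01": True, "T-02": False, "T-03": True}

status = justified_requirements(satisfies, verifies, results)
```

CR-12 is justified. CR-13 is not, because one of its scenarios failed, and neither is CR-20, because its function has no test yet. A textual requirement could be plugged into the same graph as one more traced element.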

Requirements that can be formalized as model elements (hereafter referred to as model requirements) include requirements of the following types:

- Functional, interfaces, performance: the most common, allocatable to elements of architecture model, verifiable by IVV scenarios (demonstration and tests).

- Structural (SWaP (size weight & power), distribution on geographical sites, recurring cost, number of copies, etc.): allocatable to elements of architecture model, often verifiable by inspection.

- Non-functional but related to architecture (safety, security, availability, etc.): allocatable to architecture model elements, in the expression of need (feared events, threats, essential goods, critical functions, etc.) and solution (safety and security barriers, redundancies, degraded modes, etc.), and verifiable by model analysis.

- Non-functional but verifiable by analysis or demonstration (simulation, formal proof, analysis by specialty tools including safety, security, availability, etc.): allocatable to specialized models supporting analysis or demonstration.

Requirements that can hardly be modeled include, for example:

- Regulatory requirements: compliance with standards, etc. They can be transmitted to subsystems, but are most of the time verified by inspection, and are therefore linked to few or no engineering assets.

- Contractual requirements: supply deadlines, maintenance duration, repair deadlines, etc. Same as above.

- Environment-related requirements: temperature range in operation or storage, resistance to salt spray, etc. Same as above.

- Requirements directly allocatable to subsystems, with no impact on the system: to be dealt with at a lower engineering level.

- [Requirements that are modelable by nature (performance, safety/security, etc.), but that the current maturity of MBSE practices does not yet allow us to model]: temporarily, they can have the same uses as modelable requirements.

There are at least three major uses for these model-based requirements and their complementary textual requirements:

- To build a solution that takes into account User Requirements (URs). Arcadia proposes to formalize/confront/connect them in OA/SA, and then to construct a solution traced with respect to this formalization; thus, everything happens in the model if they have been captured and formalized there.

Note: it is necessary to keep the possibility of directly linking model elements located in LA and PA to these URs: this gives more accurate traceability and avoids putting too much detail in the SA, as would happen if the SA were the only perspective accounting for these URs, while increasing the number of URs that can be modeled. This also applies to so-called requirements that are more likely premature design elements.

- To define a Verification and Validation (V&V) process that demonstrates the adequacy of the produced solution with respect to these requirements.

What is actually verified is not these descriptions in the form of textual requirements, but the satisfaction of the expected capabilities, through scenarios and functional chains representing this need, in accordance with the entire model description (including these textual description elements).

The Arcadia approach consists of building test campaigns based on capabilities, functional chains, and scenarios, while explicitly specifying and ensuring the link between need and solution (which is not done in the traditional approach); this allows both the model elements and the associated URs to be checked. Thus, we always remain in the model: there are model/test links, from which the UR/test links are deduced indirectly.

The justification of the solution with respect to URs is done, for model-driven requirements, by "indirection": we check the model elements (via tests based on the capabilities, functional chains, and scenarios of the model); once these are verified, the associated user requirements can be declared verified, and justification tables can be generated (for example, UR/test results).

- To define the realization contracts of subsystems. These are built from the physical architecture, and any impact or traceability analysis is also done in the model, which thus serves as the specification (its model elements are the subsystem requirements, SSR).

If the subsystem is also developed with a model-driven approach, we remain in this context, and the SYS/SS traceability is done between SYS and SS model elements. Otherwise, we generate documents or even requirement exports, under the same conditions as for the client SSS, as described above.

Note: the case of URs that can be directly allocated to subsystems and have no impact on the system should be considered. In this case, the simplest solution is to directly link these URs to the associated SS components in the PA.
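The "indirection" described for the V&V use (UR/test links deduced from model/test links, then a justification table) can be sketched in a few lines of Python. This is a hypothetical illustration, not Capella's data model or API: links are plain dictionaries, every identifier is invented, and the rule "a UR counts as verified when all model elements linked to it are verified" is an assumption made for the example.

```python
"""Hypothetical sketch: deducing UR/test links indirectly through model elements."""

# UR -> model elements (capabilities, functional chains, scenarios) formalizing it.
UR_TO_MODEL = {
    "UR-01": {"FC-approach", "SC-landing"},
    "UR-02": {"FC-approach"},
    "UR-03": {"CAP-maintenance"},  # formalized, but no test covers it yet
}

# Model element -> tests built on it.
MODEL_TO_TESTS = {
    "FC-approach": {"T-10", "T-11"},
    "SC-landing": {"T-12"},
}

# Outcome of a test campaign (True = passed).
TEST_RESULTS = {"T-10": True, "T-11": True, "T-12": False}


def derive_ur_tests(ur_to_model, model_to_tests):
    """Compose UR->model and model->test links into indirect UR->test links."""
    ur_tests = {}
    for ur, elements in ur_to_model.items():
        tests = set()
        for element in elements:
            tests |= model_to_tests.get(element, set())
        ur_tests[ur] = tests
    return ur_tests


def element_verified(element, model_to_tests, test_results):
    """A model element counts as verified if it has tests and all of them passed."""
    tests = model_to_tests.get(element, set())
    return bool(tests) and all(test_results.get(t, False) for t in tests)


def ur_verified(ur_to_model, model_to_tests, test_results):
    """A UR counts as verified when all the model elements it is linked to are verified."""
    return {
        ur: bool(elements)
        and all(element_verified(e, model_to_tests, test_results) for e in elements)
        for ur, elements in ur_to_model.items()
    }


if __name__ == "__main__":
    links = derive_ur_tests(UR_TO_MODEL, MODEL_TO_TESTS)
    status = ur_verified(UR_TO_MODEL, MODEL_TO_TESTS, TEST_RESULTS)
    # A minimal justification table: UR, indirectly linked tests, verdict.
    for ur in sorted(UR_TO_MODEL):
        verdict = "verified" if status[ur] else "not verified"
        print(ur, sorted(links[ur]), verdict)
```

No UR is ever linked to a test by hand here: the UR/test table is entirely derived from the model, which is the point of staying "in the model".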

In these three uses, I do not see any reason to "take the requirements out of the model" for the engineering level concerned. So for me, the simplest and most productive solution is to manage these requirements (system requirements, sub-system requirements) in the model only, with the appropriate links to URs, which are themselves by default in the model.

This also has the merit of greatly simplifying everything related to reuse, product-line variability, versioning, porting changes across branches, configuration management, feeding specialty engineering inputs and models, etc. (all sources of complexity that are still ahead of us...).

The essential point here is to consider and treat these requirements according to their real use in engineering, and to govern them by the model whenever possible (for structuring, navigation, justification...).

To summarize the global approach:

- When analyzing the need (SA, possibly OA), i.e. “translating” customer/user requirements (URs) into model elements, you link URs to SA model elements where appropriate; these model elements constitute the system requirements, along with complementary textual requirements if needed (also linked to the model).

- When defining the solution architecture in LA and PA, you create links between LA/PA functions and SA functions (and functional chains, and other SA elements). This brings indirect links between URs and LA/PA components (component to LA/PA function, to SA function, to UR).

- In some cases, you may directly link LA/PA model elements to system or user reqs, if those reqs deal with physical considerations (e.g. the kind of operating system required by the customer, or environmental conditions…); in this case, it is better not to artificially link these textual reqs to SA functions, which would create meaningless need functions, but rather to link them only to the appropriate PA objects.

- PA model elements, allocated to subsystems or components, constitute most subsystem requirements; you can add some complementary subsystem textual requirements to your PA (linked to model elements):

  - either to detail expectations on PA elements (e.g. to describe expectations on a physical function),

  - or to “split” a system req in order to allocate parts of it to different subsystems,

  - or to add reqs associated with system design choices.
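The traceability chain in this summary (component to LA/PA function, to SA function, to UR), plus the direct links kept for physical considerations, amounts to a small graph walk. The Python sketch below is hypothetical: element names are invented, the LA step is folded into the PA-to-SA "realizes" links for brevity, and the dictionaries stand in for whatever link storage the modeling tool actually provides.

```python
"""Hypothetical sketch: indirect traceability from a PA component back to URs."""

# PA component -> physical functions allocated to it.
ALLOCATED_FUNCTIONS = {
    "Comp-A": {"PF-1", "PF-2"},
    "Comp-B": {"PF-3"},
}

# Physical function -> SA functions it realizes (the LA step is folded in here).
REALIZES = {
    "PF-1": {"SF-1"},
    "PF-2": {"SF-2"},
    "PF-3": {"SF-1"},
}

# SA function -> URs it is traced to (SA elements act as system requirements).
SA_TO_UR = {
    "SF-1": {"UR-05"},
    "SF-2": {"UR-07"},
}

# Direct PA-to-UR links, kept for physical considerations (e.g. an imposed OS).
DIRECT_UR = {
    "Comp-B": {"UR-12"},
}


def urs_for_component(component):
    """URs reached via component -> PA function -> SA function -> UR,
    plus any URs linked directly to the component."""
    urs = set(DIRECT_UR.get(component, set()))
    for pa_function in ALLOCATED_FUNCTIONS.get(component, set()):
        for sa_function in REALIZES.get(pa_function, set()):
            urs |= SA_TO_UR.get(sa_function, set())
    return urs


def components_for_ur(ur):
    """Reverse query: which components contribute to a given UR."""
    return {c for c in ALLOCATED_FUNCTIONS if ur in urs_for_component(c)}


if __name__ == "__main__":
    for component in sorted(ALLOCATED_FUNCTIONS):
        print(component, sorted(urs_for_component(component)))
```

Note that UR-12 reaches Comp-B without any SA function in between, which mirrors the advice not to create artificial need functions for physical requirements.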

Here, we mainly consider the requirements received from the client (user requirements, UR), assuming that the main uses of the model are:

- defining the interfaces between subsystems,

- defining the functional expectations of each subsystem,

- making overall design choices,

- driving the system IVV.

- If a requirement concerns the architecture (e.g. realization technology, constraints on a specific component...), then link it to the LA or PA, not necessarily to the SA.

- If a requirement impacts only one subsystem, it is preferable to link it only in the subsystem model (or documents), unless it obviously impacts the system-level SA functional analysis.

- If a requirement can be fully verified at the IVV level of a subsystem, same as above, unless it obviously impacts the system-level SA analysis.

- If deemed really necessary, these requirements can be linked not only to the subsystem model but also allocated to the relevant component representing the subsystem in the system model (in LA or PA, but not in SA).

- If the requirement concerns a functional analysis expectation, several cases are possible:

  - some requirements can only be expressed on a functional chain (e.g. end-to-end latency constraint, cyber-security...) or on a scenario;

  - some requirements are specific to a function independently of its uses (e.g. criticality level), or common to all its uses (explaining and detailing the function behavior, thus detailing the function name);

  - some requirements are specific to the use of a function in a given context (capability, functional chain, scenario, or even mode or state), e.g. a function behavior that differs depending on whether the system is in manual or automatic mode; they should thus be attached to the use of the function in the functional chain or scenario (the "involvement" in Capella).

- If the requirement concerns data to be exchanged, or a condition of interaction between two functions, it is more accurate to link it to the exchange, which is likely to carry the definition of the associated data, than to the source or destination function of the exchange. In particular, this makes it possible to find the requirements mentioning a given data item by going back from it to the exchange items (EI) and functional exchanges (FE) that carry it, and from there to the associated requirements.
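The navigation mentioned in the last guideline (from a data item to the exchange items and functional exchanges carrying it, then to their requirements) can be sketched as follows. The Python classes and names are hypothetical and deliberately simplified; they do not reproduce Capella's actual metamodel.

```python
"""Hypothetical sketch: from a data item to the requirements that mention it,
via exchange items (EI) and functional exchanges (FE)."""
from dataclasses import dataclass, field


@dataclass
class FunctionalExchange:
    name: str
    source: str  # source function
    target: str  # destination function
    exchange_items: set[str] = field(default_factory=set)
    requirements: set[str] = field(default_factory=set)  # requirements linked to the FE


# Exchange item -> data elements it carries.
EXCHANGE_ITEMS = {
    "EI-position-report": {"latitude", "longitude", "timestamp"},
    "EI-weather-update": {"wind", "timestamp"},
}

EXCHANGES = [
    FunctionalExchange("FE-1", "Acquire position", "Display position",
                       {"EI-position-report"}, {"REQ-12"}),
    FunctionalExchange("FE-2", "Receive weather", "Plan approach",
                       {"EI-weather-update"}, {"REQ-20"}),
]


def requirements_mentioning(data, exchange_items, exchanges):
    """Navigate data -> EIs carrying it -> FEs carrying those EIs -> requirements."""
    carrying_eis = {ei for ei, carried in exchange_items.items() if data in carried}
    found = set()
    for fe in exchanges:
        if fe.exchange_items & carrying_eis:
            found |= fe.requirements
    return found


if __name__ == "__main__":
    print(sorted(requirements_mentioning("timestamp", EXCHANGE_ITEMS, EXCHANGES)))
```

Had REQ-12 been attached to "Acquire position" instead of FE-1, this query would have no path from the data to it, which is why the guideline prefers the exchange.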

What is important is that, in a finalized engineering, every requirement is allocated either to a model element, to another engineering element (simulation, study...), or to a subsystem. It would be useless and artificial to constrain or overload the SA to account for requirements that constrain neither the functional aspects nor the system's interactions with the outside world (an example such as "the processors used must be octo-core Core i7" makes this easier to understand).
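A minimal completeness check for this rule could look like the Python sketch below, assuming requirement allocations can be exported as a mapping from requirement to (target kind, target name) pairs. The three target kinds come from the paragraph above; all requirement IDs and target names are invented for illustration.

```python
"""Hypothetical sketch: checking that every requirement is allocated somewhere."""
from enum import Enum


class TargetKind(Enum):
    MODEL_ELEMENT = "model element"
    ENGINEERING_ELEMENT = "engineering element"  # e.g. a simulation or a study
    SUBSYSTEM = "subsystem"


# Requirement -> list of (kind, target name) allocations; all names are invented.
ALLOCATIONS = {
    "UR-01": [(TargetKind.MODEL_ELEMENT, "FC-approach")],
    "UR-08": [(TargetKind.ENGINEERING_ELEMENT, "Thermal study")],
    "UR-15": [(TargetKind.SUBSYSTEM, "Processing unit")],  # e.g. an imposed processor type
    "UR-21": [],  # not allocated yet
}


def unallocated(allocations):
    """Requirements with no allocation at all, in sorted order."""
    return sorted(req for req, targets in allocations.items() if not targets)


def count_by_kind(allocations):
    """Number of allocations per target kind, as a quick coverage overview."""
    counts = {kind: 0 for kind in TargetKind}
    for targets in allocations.values():
        for kind, _name in targets:
            counts[kind] += 1
    return counts


if __name__ == "__main__":
    missing = unallocated(ALLOCATIONS)
    print("Unallocated requirements:", missing or "none")
```

UR-15, the processor-type case, is allocated to a subsystem and never touches the SA, in line with the paragraph above.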

It is important to understand the purpose of the operational analysis and when it comes into play in engineering. Operational analysis is particularly useful when seeking to best satisfy a client's need without an imposed system scope, or when seeking innovative ways to satisfy this need.

The OA should not mention the system, as it aims to understand the client's need without any assumption about how the system will contribute to it; this avoids restricting the field of possibilities too early (this restriction is only done in System Analysis, by deciding at that level what will be requested from the system and what will remain with the operators and external systems).

The observation is that when the system is mentioned in operational analysis, potentially interesting alternatives in system definition are already excluded.

Consider this example: the client needs a means to hang a painting on the wall. The tendency is to formulate the need as "how do I use my drill system, what do I do with it?". As a result, the system (the drill) appears in the operational analysis, and the game is over: no smarter alternative is possible. The operational analysis is then of little use, as it merely duplicates the role of System Analysis.

What Arcadia (and Architecture Frameworks such as NAF) suggests is to restrict Operational Analysis to what the client and stakeholders need to do: here, the (operational) capability to have a fixing point at a specific location. Therefore, I do not yet talk about a drill in the operational analysis, but try to analyze the need thoroughly: should the fixing be reversible? can the wall be damaged? should the position of the painting be adjustable? what will be the maximum weight of the painting? what will be its size? will it have to be hung and removed frequently? who will fix it, with what qualification?

From this Operational Analysis, Arcadia recommends a capability analysis, i.e., finding the different possible alternatives and comparing them: here,

- a hole + dowel + drill,

- but also a self-drilling point and a hammer,

- or an adhesive hook, or contact glue…

We analyze the various solutions (ease of implementation, price, user training...) through successive candidate System Analysis and early architecture perspectives (SA/LA/PA), and choose the best one (the best compromise). Suppose it is the drill and dowel: we can now define the system, its system capabilities (fixing a dowel) and, in the final System Analysis, its functions (drilling, choosing the diameter, controlling the depth...).
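Comparing alternatives in search of the best compromise can take many forms; one simple option, not prescribed by Arcadia, is a weighted-sum score. The Python sketch below applies it to the three alternatives listed above. The criteria, weights, and 1-to-5 scores are invented for illustration only; they are not measured data.

```python
"""Hypothetical sketch: weighted-sum comparison of candidate solutions."""

# Relative importance of each criterion (integers keep the arithmetic exact).
WEIGHTS = {"load_capacity": 4, "reversibility": 2, "price": 2, "user_training": 2}

# Illustrative score of each candidate per criterion, 1 (poor) to 5 (good).
CANDIDATES = {
    "hole + dowel + drill": {"load_capacity": 5, "reversibility": 2, "price": 3, "user_training": 2},
    "self-drilling point + hammer": {"load_capacity": 3, "reversibility": 2, "price": 4, "user_training": 4},
    "adhesive hook / contact glue": {"load_capacity": 1, "reversibility": 3, "price": 5, "user_training": 5},
}


def weighted_score(scores, weights):
    """Sum of weight * score over all criteria."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank(candidates, weights):
    """Return (name, score) pairs, best compromise first."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(CANDIDATES, WEIGHTS):
        print(f"{score:3d}  {name}")
```

With these made-up numbers the drill and dowel comes first (34 against 32 and 30), matching the supposition in the text. Giving reversibility the largest weight instead (e.g. weights 1, 5, 2, 2) puts the adhesive hook first, which is exactly the kind of sensitivity the comparison is meant to expose.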

Revisiting the OA as technologies evolve can then lead to proposing new products or solutions.

Once again, focusing on the system in Operational Analysis can bias the analysis. Two (somewhat caricatured) examples:

- If the system under engineering is an execution platform (a multiprocessor platform or a private cloud, for example) and we focus the Operational Analysis on the platform, we naturally define how each actor interacts with it, at the risk of forgetting that the software applications hosted by the platform also (and first of all) communicate with each other, and that the platform has a role to play in this communication.

The approach proposed by Arcadia or the Architecture Frameworks would consist, in Operational Analysis, of analyzing, for each actor, with whom and why it communicates; the "direct" communication between application actors would thus appear. When building the System Analysis afterward, we would ask how the platform system can support this inter-application communication, and would thus reveal communication services provided by the platform, which would also allow us to properly characterize and size the needs of applications (e.g., broadcasting, events, microservices, etc.).

- Another example: a communication management system in an airliner. If the initial OA had been done by including the system (wrongly, as you understood ;-)), it would describe the links between the system and the actors (airlines, air traffic control, airport, weather services, etc.), and these links would be characterized by frequencies, protocols, etc.

In this case, when moving to the SA, the added value of the SA compared to the OA is often not seen, and rightly so. Furthermore, there is still no means to size the operator workload (e.g. during final approach) or the complexity of data exchanges.